
Digital Strategy


The emergence and adoption of digital technology leads to a multitude of benefits, but it also introduces risks. In an increasingly digital world, communities can find themselves socially or economically marginalized if they choose, for reasons of tradition or cultural preservation, not to opt in to the changing society around them. For those who do opt in, online forms of harassment can exacerbate existing inequalities and conflict dynamics. If left unaddressed, these vulnerabilities can lead to extensive political, social, and economic damage and, ultimately, derail a country’s Journey to Self-Reliance.

THE PERSISTENT DIGITAL DIVIDE

Multiple, stubborn digital divides exist between those who have access to digital products and services and those who do not—between urban and rural communities, indigenous and non-indigenous populations, young and old, male and female, and persons with or without disabilities. These divides are not isolated to the poorest countries and frequently persist even when national-level access improves. Closing these divides wherever they exist is key to achieving USAID’s goals.

Private-sector investments in digital infrastructure often exclude areas and populations for which the business case cannot be readily justified or the risk is too burdensome. Marginalized populations might require public investment to aggregate demand, lower the cost of market entry, and extend connectivity to previously unreached areas—a role USAID is well positioned to play through the use of our funds, flexible authorities, partnerships with technology companies, and technical expertise to mitigate risk and to “crowd in” public and private resources.

WOMENCONNECT CHALLENGE: BRIDGING THE GENDER DIGITAL DIVIDE

Around 1.7 billion women in low- and middle-income countries do not own mobile phones, and the gap in Internet use between men and women has grown in recent years.43 In 2018, USAID launched the WomenConnect Challenge to address this gap. With a goal of enabling women’s and girls’ access to, and use of, digital technologies, the first call for solutions brought in more than 530 ideas from 89 countries; USAID selected nine organizations to receive $100,000 awards. In the Republic of Mozambique, the development-finance institution GAPI is lowering barriers to women’s mobile access by providing offline Internet browsing, rent-to-own options, and micro-entrepreneurship training tailored to women in each region. Another awardee, AFCHIX, creates opportunities for rural women in the Kingdom of Morocco and the Republics of Kenya, Namibia, and Sénégal to become network engineers and build their own community networks or Internet services. The entrepreneurial and empowerment program helps women establish their own companies, provides important community services, and positions these women as role models.

At the same time, emerging technologies can pose new challenges to inclusion. Because AI-enabled tools often rely on machine-learning algorithms that use historical data to detect patterns and make predictions, they can reproduce or amplify biases that might be present in those data.44 The February 2019 Executive Order on Maintaining American Leadership in Artificial Intelligence states, “[t]he United States must foster public trust and confidence in AI technologies and protect civil liberties, privacy, and American values in their application.”45 Similarly, the Principles on Artificial Intelligence endorsed by the Organisation for Economic Co-operation and Development (OECD), adopted by 42 countries including the United States, stress the importance of human rights and diversity, as well as building safeguards and accountability when designing systems that rely on AI.46 We must balance the adoption of new technologies with a measured assessment of their ethical, fair, and inclusive use in development.47

Likewise, one billion people in the world, mostly in developing countries, lack appropriate means of identification (ID),48 which creates a divide between those who can prove their identity and those who cannot, and excludes large groups from civic participation and access to public services. As digital ID systems become increasingly prevalent, we run the risk of further excluding people if these systems are not carefully designed and deployed. Host-country governments and USAID partners must avoid adopting tools that exacerbate existing inequities, which would harm already-marginalized people and undermine trust in the organizations that deploy these tools; instead, they must ensure that digital systems and tools benefit affected populations equitably.

THREATS TO INTERNET FREEDOM AND HUMAN RIGHTS

As articulated in the U.S. National Cyber Strategy, the United States is committed to ensuring the protection and promotion of an open, interoperable, reliable, and secure Internet that represents and safeguards the online exercise of human rights and fundamental freedoms—such as freedom of expression, association, religion, and peaceful assembly.49 For many people across the globe, reality does not reflect this ideal state. According to Freedom House, the global state of Internet freedom in 2019 declined for the ninth consecutive year, which presents challenges to democracy worldwide.50 These threats are not new, but they are taking on new forms in a digital age.

One major threat to digital ecosystems is what some have termed digital authoritarianism, in which a repressive government controls the Internet and uses censorship, surveillance, and data/media laws or regulations to restrict or repress freedom of expression, association, religion, and peaceful assembly at scale.51 Authoritarian governments also threaten freedom of expression and movement by encouraging the design and use of online systems for surveillance and social control—for example, by promoting and adopting digital facial-recognition systems that enable the passive identification of citizens and visitors.52 The rise of digital authoritarianism is especially concerning during times of complex emergencies, when lack of access to information can hinder the delivery of humanitarian assistance. Consistent with our Clear Choice Framework and Development Framework for Countering Malign Kremlin Influence, USAID will continue to champion Internet freedom by driving multi-stakeholder conversations related to Internet governance and supporting commitment to Internet freedom and human rights around the globe.

USAID’S PARTNER ORGANIZATIONS COUNTER ONLINE HATE SPEECH

Experience from USAID’s programs suggests that media literacy alone is not sufficient to address the volume of hate speech circulated on online platforms. Since 2015, USAID has funded partners in Southeast Asia to reduce the impact of hate speech on underlying community tensions, which can ultimately lead to riots, forcible displacement, and death. USAID’s partner organizations produce and distribute messages to raise awareness about hate speech, both locally and with relevant authorities on global platforms. Our implementers also work closely with local leaders to build their awareness of hate speech and tailor online and offline interventions to community dynamics. USAID’s experience indicates that no one is better positioned than local organizations to demand independent audits and apply the pressure necessary to hold platforms accountable to the ideals of transparency and accuracy of information.

HATE SPEECH AND VIOLENT EXTREMISM ONLINE

The same digital tools that allow governments, businesses, and civil society to connect efficiently and at scale enable individuals and organizations with hateful or violent ideologies to reach potential followers and recruits. The United States is clear in its commitment to exposing violent extremism online and working with local partners and technology platforms to communicate alternatives.53 This includes implementing programs to counter violent extremism that are focused, tailored, and measurable, as articulated in the USAID Policy on Countering Violent Extremism in Development,54 and an explicit call to understand how to counter violent extremism and hate speech through digital platforms.55

THE INFLUENCE OF ONLINE MISINFORMATION AND DISINFORMATION ON DEMOCRATIC PROCESSES

Recent events have shown the ability of misinformation and disinformation campaigns to sow distrust and undermine democracy.56 Particularly during periods of political transition, misinformation can create as much harm as disinformation.e Furthermore, the push to correct misinformation is often a thinly veiled cover for the disinformation efforts of authoritarian or would-be authoritarian governments. As USAID-funded programs work to increase the digital influence of local partners, the Agency must prepare staff and partners to anticipate and respond to coordinated disinformation campaigns against their work.

Both state and non-state actors are working to pollute the information available on the Internet. In addition to traditional methods (for example, using fake accounts and websites to spread divisive messages), these actors can buy followers, employ automated bot networks, manipulate search engines, and adopt other tactics used by counterfeiters to confuse and persuade. Furthermore, technologies that enable “deep fakes” can not only deepen societal divisions, shape public perceptions, and create “false facts” and “truths,” but also lead to actual conflict and lend significant advantages to violent non-state adversaries.57 USAID and our interagency U.S. Government partners are committed to coordinating efforts to counter misinformation and disinformation generated by state and non-state actors58 and funding supply- and demand-side interventions to reach those ends.59

NEW RISKS TO PRIVACY AND SECURITY

Digital-information systems increase the availability of data and the ease of its storage and transfer, which lowers the “transaction costs” that have historically served as de facto protections of data privacy.60 This increased ease of access compels us to reassess how we conceptualize privacy protections in a digital age. As many communities USAID and its partners serve come online for the first time, we must provide resources to, and help develop the capabilities of, partners to enhance the safeguarding of personally identifiable information (PII) and other sensitive information. Even datasets scrubbed of PII might, when merged and analyzed together, expose individuals to reidentification.61 Additionally, it is now possible to discern sensitive information, such as someone’s political leanings or sexual orientation, simply through tracking his or her online behavior or mobile devices.62 As it becomes easier to create a “mosaic” of someone’s identity from disparate pieces of digital data, norms and definitions of privacy are proving anything but static.

Privacy risks are particularly acute in humanitarian crises, where displacement and uncertainty increase vulnerability, and recipients of aid can feel pressured to share personal data in exchange for urgent assistance. Threats to privacy can come from nefarious actors who engage in doxing63 and digital intimidation, but they can also come from unwittingly harmful actors—groups who might not have proper security protocols in place, for example. Conversations related to the responsible protection and use of data cannot be separated from conversations related to the benefits of open data for transparency and the flow of information for international trade.

Cybersecurity risks can jeopardize a country’s infrastructure and services at a national level. Ukraine experienced the first known cyberattack on a power grid in December 2015, when 225,000 people lost power.64 The country experienced another cyberattack in June 2017, which affected computer systems, automated teller machines (ATMs), an airport, and even the radiation-monitoring system at the Chernobyl nuclear plant, before spreading worldwide.65 In 2016, hackers stole $81 million from Bangladesh’s central bank by infiltrating its computer systems and using the SWIFT payment network to initiate the transfer.66

These examples demonstrate the potential economic impact and damage to trust in public institutions due to cybersecurity failures. Much like terrorist attacks, high-profile cyberattacks can undermine the legitimacy of governments by highlighting their inability to protect their citizens from harm.

In a digital ecosystem, the frontlines of defense against cyber threats and data breaches (and often the most vulnerable points) are a country’s workforce: engineers, bank managers, government officials, or development practitioners. Because of the critical role the workforce plays in maintaining cybersecurity and recovering from cyberattacks, it needs adequate digital skills and training; the right processes, policies, and systems; and an appropriately protective legal and regulatory environment. In support of the Journey to Self-Reliance, USAID, in partnership with other U.S. Government Agencies and Departments, plays an important role in building the cyber capacity of partner-country governments and industry; promoting regulations and laws that protect privacy and freedom of expression; uniting industry, government, and educational institutions to develop a highly qualified cybersecurity workforce; and increasing the digital literacy and digital security of citizens.

The responsible use of data requires balancing three key factors, which can sometimes be in tension, as detailed in Considerations for Using Data Responsibly at USAID. The use of data helps maximize the effectiveness and efficiency of our programs. Privacy and security help avoid unintentional harm to both the subjects of data (people described by data) and the stewards of data (organizations that collect, store, and analyze data). Transparency and accountability require sharing data as broadly as possible with host-country governments, U.S. taxpayers, and the people directly affected by our work. Effectively navigating these complex issues is critical to maintaining trust in digital systems and creating opportunities for beneficial innovation.

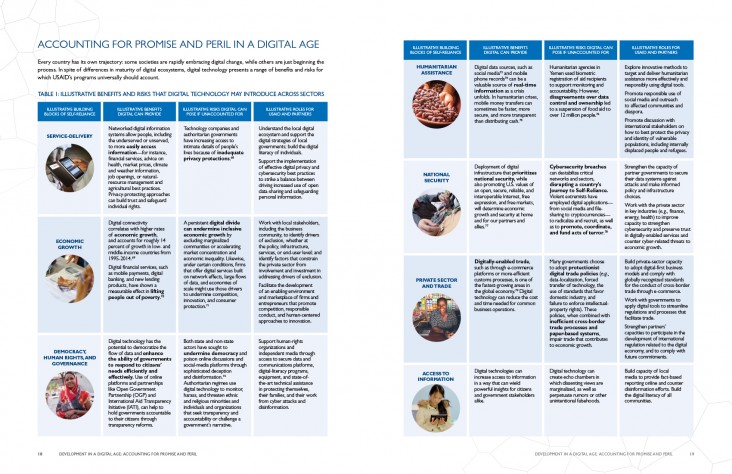

ACCOUNTING FOR PROMISE AND PERIL IN A DIGITAL AGE

Every country has its own trajectory: some societies are rapidly embracing digital change, while others are just beginning the process. In spite of differences in the maturity of digital ecosystems, digital technology presents a range of benefits and risks that all of USAID’s programs should account for.

e. Misinformation refers to factually inaccurate content distributed regardless of whether there is an intent to deceive, while disinformation refers to factually inaccurate content distributed intentionally for political, economic, or other gain.
